Self-host vs PostHog Cloud:

Self-hosting PostHog means managing the service yourself, taking care of upgrades, scaling, security, and so on.

If you are less technical, want a hands-off experience, or want to optimize your budget, we recommend PostHog Cloud.

PostHog Cloud is also considerably cheaper than paying for the cloud infrastructure needed to self-host. You can find more information about PostHog Cloud's costs on the pricing page.

You can also find support partners to manage the service for you via the PostHog Marketplace.

DigitalOcean is one of the most well-established cloud providers. Compared to AWS, GCP, and Azure, where the number of options and configuration settings can be overwhelming, DigitalOcean is generally simpler to use and faster to get running.

The first thing you'll need is a DigitalOcean account. You can click on the badge below to get US$100 in credit over 60 days (i.e. run PostHog for free for ~2 months).

Then you can either follow the 1-click install or the manual install.

1-click install

Click here to deploy PostHog using the 1-click install. The DigitalOcean UI will ask you if you want to install PostHog on an already provisioned Kubernetes cluster or if you want to create a new one.

Once the setup is completed, you can use either of the two methods below to fetch the URL of your new PostHog installation:

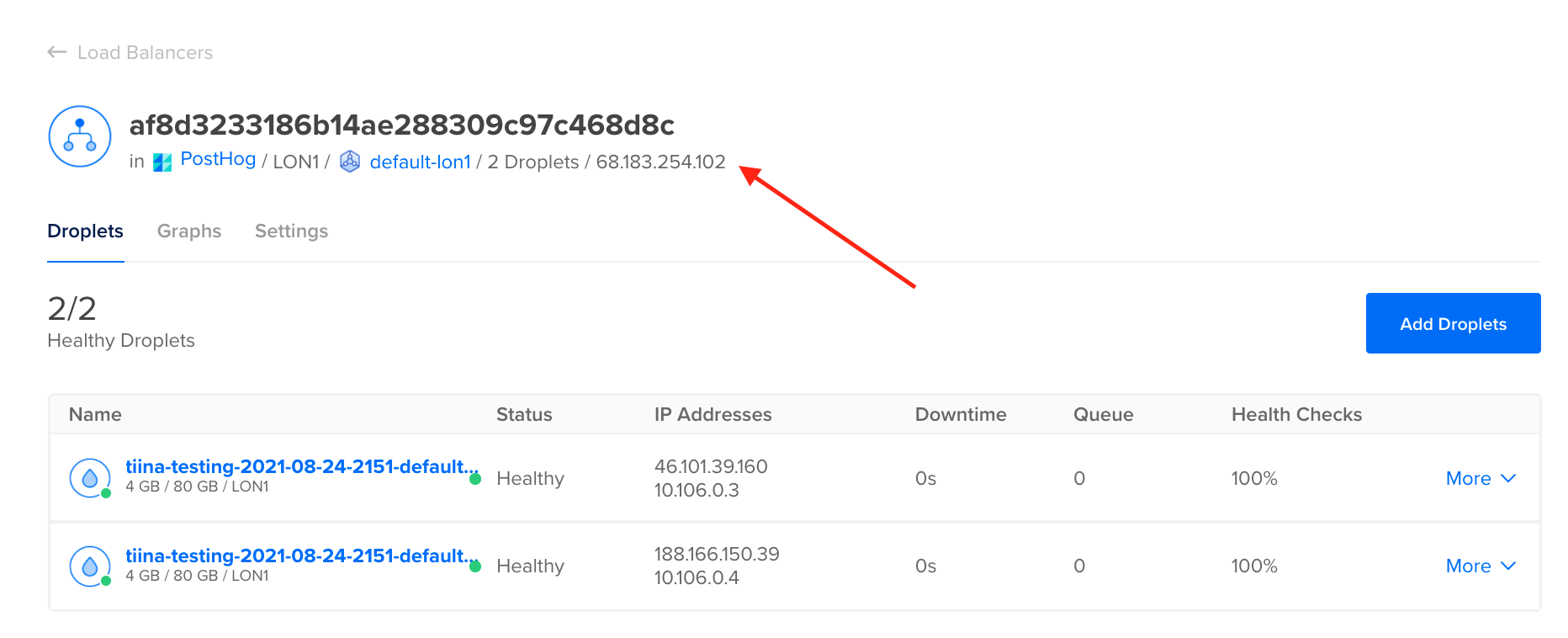

In the DigitalOcean console, go to the

Resourcestab of your Kubernetes cluster and click on the load balancer assigned to the cluster. This will bring you to the (networking tab) where you can get the IP address of the load balancer created by Kubernetes:

If you have configured

kubectlaccess to your cluster, run the following commands:TerminalPOSTHOG_IP=$(kubectl get --namespace posthog ingress posthog -o jsonpath="{.status.loadBalancer.ingress[0].ip}" 2> /dev/null)POSTHOG_HOSTNAME=$(kubectl get --namespace posthog ingress posthog -o jsonpath="{.status.loadBalancer.ingress[0].hostname}" 2> /dev/null)if [ -n "$POSTHOG_IP" ]; thenPOSTHOG_INSTALLATION=$POSTHOG_IPfiif [ -n "$POSTHOG_HOSTNAME" ]; thenPOSTHOG_INSTALLATION=$POSTHOG_HOSTNAMEfiif [ ! -z "$POSTHOG_INSTALLATION" ]; thenecho -e "\n----\nYour PostHog installation is available at: http://${POSTHOG_INSTALLATION}\n----\n"elseecho -e "\n----\nUnable to find the address of your PostHog installation\n----\n"fi

Note: before using PostHog in production, remember to secure your 1-click installation. Without securing your instance, you will only be able to test web apps served over HTTP, such as those running on localhost.

Securing your 1-click install

Unfortunately, it's not yet possible to provide parameters to DigitalOcean 1-click installations, so we need a post-install step to enable TLS. To do this, you need kubectl and Helm installed, and kubectl access to your cluster configured.

1. Update PostHog

Create a values.yaml file in the current directory with the following content:
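As a minimal sketch, assuming the PostHog chart accepts the keys cloud, ingress.hostname, ingress.nginx.enabled and cert-manager.enabled (verify them against the chart's configuration reference before relying on them), such a file could be written with the hypothetical helper below. The hostname argument is a placeholder for the DNS record you will create in step 4.

```shell
# Hypothetical helper: writes a minimal values.yaml for a DigitalOcean install.
# The chart keys below are assumptions; check them against the chart's
# configuration reference.
write_posthog_values() {
  local hostname="$1"               # e.g. app.example.com (your future DNS record)
  local file="${2:-values.yaml}"    # output path, defaults to ./values.yaml
  if [ -z "$hostname" ]; then
    echo "usage: write_posthog_values <hostname> [file]" >&2
    return 1
  fi
  cat > "$file" <<EOF
cloud: "do"
ingress:
  hostname: ${hostname}
  nginx:
    enabled: true
cert-manager:
  enabled: true
EOF
}
```

For example, write_posthog_values app.example.com creates ./values.yaml in the current directory.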

Note: if you are planning to use our GeoIP integration, please also add the snippet below to enable proxy protocol support in the load balancer and in the nginx ingress controller:
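A sketch of that addition, assuming the chart forwards a top-level ingress-nginx section to its ingress-nginx subchart. controller.config is where the ingress-nginx chart takes ConfigMap options such as use-proxy-protocol, and service.beta.kubernetes.io/do-loadbalancer-enable-proxy-protocol is a DigitalOcean load balancer annotation; the exact layout PostHog expects is an assumption here, and append_geoip_values is a hypothetical helper.

```shell
# Hypothetical helper: appends proxy protocol settings (for GeoIP) to values.yaml.
# Assumes the file does not already contain a top-level "ingress-nginx:" key;
# the key layout is an assumption, not confirmed chart documentation.
append_geoip_values() {
  local file="${1:-values.yaml}"
  if grep -q '^ingress-nginx:' "$file" 2>/dev/null; then
    echo "$file already has an ingress-nginx section; merge the settings by hand" >&2
    return 1
  fi
  cat >> "$file" <<'EOF'
ingress-nginx:
  controller:
    config:
      # Tell nginx to expect the PROXY protocol header from the load balancer
      use-proxy-protocol: "true"
    service:
      annotations:
        # Ask the DigitalOcean load balancer to send the PROXY protocol header
        service.beta.kubernetes.io/do-loadbalancer-enable-proxy-protocol: "true"
EOF
}
```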

Since you started with the 1-click deploy, you'll also need the Helm chart locally, so run:
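These are the same repository commands listed under "Upgrading the chart" further down, wrapped here in a hypothetical helper function:

```shell
# Hypothetical helper: registers the PostHog chart repository
# and refreshes the local chart index.
fetch_posthog_chart() {
  helm repo add posthog https://posthog.github.io/charts-clickhouse/ &&
    helm repo update
}
```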

Now you can upgrade your cluster with the new values:
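A sketch of the upgrade, assuming the 1-click install created a Helm release named posthog in the posthog namespace (the names the address lookup commands above query). The flags mirror the command under "Run the upgrade" further down; upgrade_posthog is a hypothetical helper.

```shell
# Hypothetical helper: upgrades the "posthog" release in the "posthog"
# namespace with the given values file (defaults to ./values.yaml).
upgrade_posthog() {
  local values_file="${1:-values.yaml}"
  helm upgrade -f "$values_file" --timeout 30m --namespace posthog \
    posthog posthog/posthog --atomic --wait --wait-for-jobs --debug
}
```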

2. Install ClusterIssuer

Create a new cluster resource that will take care of signing your TLS certificates using Let’s Encrypt.

Create a new file called cluster-issuer.yaml with the following content. Note: please remember to replace your-name@domain.com with a valid email address, as you will receive email notifications about certificate renewals:

```yaml
# cluster-issuer.yaml
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-prod
spec:
  acme:
    email: "your-name@domain.com"
    server: https://acme-v02.api.letsencrypt.org/directory
    privateKeySecretRef:
      name: posthog-tls
    solvers:
      - http01:
          ingress:
            class: nginx
```

Deploy this new resource to your cluster by running:

```shell
kubectl apply -f cluster-issuer.yaml
```
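To confirm that cert-manager accepted the issuer before moving on, you could wait for its Ready condition. A minimal sketch; check_cluster_issuer is a hypothetical helper, and the 120-second timeout is an arbitrary choice.

```shell
# Hypothetical helper: waits for the letsencrypt-prod ClusterIssuer to become
# Ready; on failure, prints its status and events to help with debugging.
check_cluster_issuer() {
  if kubectl wait --for=condition=Ready clusterissuer/letsencrypt-prod --timeout=120s >/dev/null; then
    echo "ClusterIssuer letsencrypt-prod is ready"
  else
    kubectl describe clusterissuer letsencrypt-prod
    return 1
  fi
}
```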

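3. Look up the address of the installation

Use either of the two methods described in the 1-click install section above to look up the address of your installation. Note: due to a limitation in the DigitalOcean ingress controller, the kubectl method will not work if you have enabled the settings to support the GeoIP integration. In that case, use the web UI to get your IP address.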
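Since the DigitalOcean load balancer can take a few minutes to be provisioned, the kubectl method may return nothing at first. A hypothetical polling wrapper around the same kubectl queries (with the same GeoIP limitation) could look like this; the default of 30 attempts, 10 seconds apart, is an arbitrary choice.

```shell
# Hypothetical helper: polls the posthog ingress until the load balancer has an
# external address, then prints it (hostname preferred over IP, as above).
wait_for_posthog_address() {
  local attempts="${1:-30}" delay="${2:-10}" i=1 ip host
  while [ "$i" -le "$attempts" ]; do
    ip=$(kubectl get --namespace posthog ingress posthog \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null)
    host=$(kubectl get --namespace posthog ingress posthog \
      -o jsonpath='{.status.loadBalancer.ingress[0].hostname}' 2>/dev/null)
    if [ -n "$host$ip" ]; then
      echo "${host:-$ip}"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "no address assigned after $attempts attempts" >&2
  return 1
}
```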

4. Setting up DNS

Do not use posthog or tracking-related words as your subdomain record. As we grow, PostHog-owned domains might be added to tracker blockers. To reduce the risk of tracker blockers interfering with events sent to your self-hosted instance, we suggest avoiding any combination of potentially triggering words in your subdomain. Examples of words to avoid are: posthog, tracking, tracker, analytics, metrics.
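A quick way to screen a candidate hostname against the words listed above is a small check like the hypothetical helper below. It only inspects the first DNS label (the subdomain), case-insensitively, and the word list is the one from this page, not an exhaustive list of what tracker blockers match.

```shell
# Hypothetical helper: succeeds (returns 0) if the subdomain of the given
# hostname contains one of the words this guide recommends avoiding.
is_risky_subdomain() {
  local label word
  # Keep only the first DNS label and lower-case it
  label=$(printf '%s' "${1%%.*}" | tr '[:upper:]' '[:lower:]')
  for word in posthog tracking tracker analytics metrics; do
    case "$label" in
      *"$word"*) return 0 ;;
    esac
  done
  return 1
}
```

For example, is_risky_subdomain ph-analytics.example.com succeeds, so you would pick another subdomain, while app.example.com passes.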

Create a DNS record for your desired hostname pointing to the address found above (an A record if it is an IP address, or a CNAME record if it is a hostname). After around 30 minutes (the time required to request, receive and deploy the TLS certificate), you should have a fully working and secure PostHog instance available at the domain you've chosen!
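While you wait, you can check that the record has propagated. A minimal sketch, assuming dig is installed; dns_points_to is a hypothetical helper that accepts either the load balancer IP (A record) or hostname (CNAME record) found above.

```shell
# Hypothetical helper: succeeds if the hostname resolves to the expected
# address. dig +short prints one record per line; CNAME targets end with ".".
dns_points_to() {
  local hostname="$1" expected="$2"
  [ -n "$expected" ] || return 2
  dig +short "$hostname" | grep -qxF -e "$expected" -e "${expected}."
}
```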

Install via doctl

If you already have doctl configured, you can run:
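A sketch of such a command, assuming the 1-click app slug is posthog (you can check with doctl kubernetes 1-click list) and using example values for the cluster name, region, node size and node count. Two s-2vcpu-4gb nodes give the 4 vCPUs and 8 GB of memory suggested under "Cluster requirements" below. No --version is passed, so doctl uses its default Kubernetes version; compare it with the supported range listed there. create_posthog_cluster is a hypothetical helper.

```shell
# Hypothetical helper: creates a DigitalOcean Kubernetes cluster with the
# PostHog 1-click app. Name, region, size and count are example values.
create_posthog_cluster() {
  local name="${1:-my-cluster}" region="${2:-nyc3}"
  doctl kubernetes cluster create "$name" \
    --region "$region" \
    --size s-2vcpu-4gb \
    --count 2 \
    --1-clicks posthog
}
```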

This will also configure your kubectl access.

Manual install

First, we need to set up a Kubernetes cluster (see the official DigitalOcean documentation for more info).

Cluster requirements

Kubernetes version >= 1.23 and <= 1.25

Kubernetes nodes:

- ensure you have enough resources available (we suggest a total minimum of 4 vCPUs and 8 GB of memory)

- ensure you can run x86-64/amd64 workloads. The arm64 architecture is currently not supported
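To check an existing cluster against these requirements, you could use hypothetical helpers like the ones below. k8s_version_supported parses a version string such as v1.24.8 (or DigitalOcean's 1.24.8-do.0 format) and checks that it is between 1.23 and 1.25; check_node_arch reads each node's reported CPU architecture through kubectl.

```shell
# Hypothetical helper: succeeds if the Kubernetes version is >= 1.23 and <= 1.25.
k8s_version_supported() {
  local v major minor
  v="${1#v}"                   # "v1.24.8" -> "1.24.8"
  major="${v%%.*}"             # -> "1"
  minor="${v#*.}"              # -> "24.8" (or "24.8-do.0")
  minor="${minor%%.*}"         # -> "24"
  minor="${minor%%[!0-9]*}"    # drop suffixes such as "+" in "24+"
  case "$major" in ''|*[!0-9]*) return 2 ;; esac
  case "$minor" in ''|*[!0-9]*) return 2 ;; esac
  [ "$major" -eq 1 ] && [ "$minor" -ge 23 ] && [ "$minor" -le 25 ]
}

# Hypothetical helper: fails if any node reports an architecture other than amd64.
check_node_arch() {
  local archs arch
  archs=$(kubectl get nodes -o jsonpath='{.items[*].status.nodeInfo.architecture}') || return 1
  [ -n "$archs" ] || { echo "no nodes found" >&2; return 1; }
  for arch in $archs; do
    if [ "$arch" != amd64 ]; then
      echo "unsupported node architecture: $arch" >&2
      return 1
    fi
  done
  return 0
}
```

For example, assuming jq is installed: k8s_version_supported "$(kubectl version -o json | jq -r .serverVersion.gitVersion)" && check_node_arch.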

Suggestion: ensure allowVolumeExpansion is set to true in the storage class definition (this setting enables PVC resizing).

PersistentVolumes can be configured to be expandable. When this feature is set to true, users can resize a volume by editing the corresponding PersistentVolumeClaim object. This can be useful if your storage usage grows and you want to resize the disk on the fly without having to resync data across PVCs.

To verify if your storage class allows volume expansion you can run:

```shell
kubectl get storageclass -o json | jq '.items[].allowVolumeExpansion'
# Example output:
# true
```

If it returns false, you can enable volume expansion capabilities for your storage class by running:

```shell
# Look up the name of the default storage class
DEFAULT_STORAGE_CLASS=$(kubectl get storageclass -o=jsonpath='{.items[?(@.metadata.annotations.storageclass\.kubernetes\.io/is-default-class=="true")].metadata.name}')
# Allow PVCs using this storage class to be resized
kubectl patch storageclass "$DEFAULT_STORAGE_CLASS" -p '{"allowVolumeExpansion": true}'
# Example output:
# storageclass.storage.k8s.io/gp2 patched
```

N.B.:

- expanding a persistent volume is a time-consuming operation

- some platforms have a per-volume quota of one modification every 6 hours

- not all volume types support this feature. Please take a look at the official docs for more info

Suggestion: ensure reclaimPolicy is set to Retain in the storage class definition (this setting allows for manual reclamation of the resource).

The Retain reclaim policy allows for manual reclamation of the resource. When the PersistentVolumeClaim is deleted, the PersistentVolume still exists and the volume is considered "released", but it is not yet available for another claim because the previous claimant's data remains on the volume (see the official documentation). This can be useful if you need to reprovision a pod or StatefulSet but don't want to lose the underlying data.

To verify which reclaimPolicy your default storage class is using, you can run:

```shell
kubectl get storageclass -o json | jq '.items[].reclaimPolicy'
# Example output:
# "Retain"
```

If your storage class allows it, you can modify the reclaimPolicy by running:

```shell
# Look up the name of the default storage class
DEFAULT_STORAGE_CLASS=$(kubectl get storageclass -o=jsonpath='{.items[?(@.metadata.annotations.storageclass\.kubernetes\.io/is-default-class=="true")].metadata.name}')
# Set its reclaim policy to Retain
kubectl patch storageclass "$DEFAULT_STORAGE_CLASS" -p '{"reclaimPolicy": "Retain"}'
# Example output:
# storageclass.storage.k8s.io/gp2 patched
```

Note: to reduce the overhead of managing stateful services like PostgreSQL, Kafka, Redis and ClickHouse yourself, we suggest running them outside Kubernetes and offloading their provisioning, building and maintenance operations:

- for PostgreSQL, take a look at DigitalOcean managed databases

- for Apache Kafka, take a look at Confluent Cloud

- for Redis, take a look at Redis Enterprise Cloud

- for ClickHouse, take a look at Altinity Cloud

1. Chart configuration

Here's the minimal required values.yaml that we'll be using later. You can find an overview of the parameters that can be configured during installation under configuration.

Note: if you are planning to use our GeoIP integration, please also add the snippet below to enable proxy protocol support in the load balancer and in the nginx ingress controller:

2. Install the chart

To install the chart using Helm with the release name posthog in the posthog namespace, run the following:
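A sketch of the install, assuming the same chart repository and flags as in "Upgrading the chart" below. helm upgrade --install installs the release if it doesn't exist yet, and --create-namespace creates the posthog namespace if needed; install_posthog is a hypothetical helper.

```shell
# Hypothetical helper: adds the chart repo, then installs (or upgrades) the
# "posthog" release in the "posthog" namespace with the given values file.
install_posthog() {
  local values_file="${1:-values.yaml}"
  helm repo add posthog https://posthog.github.io/charts-clickhouse/ &&
    helm repo update &&
    helm upgrade --install -f "$values_file" --timeout 30m --create-namespace \
      --namespace posthog posthog posthog/posthog --wait --wait-for-jobs --debug
}
```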

Note: if you decide to use a different Helm release name or namespace, please keep in mind you might have to change several values in your values.yaml in order to make the installation successful. This is because we build several Kubernetes resources (like service names) using those.

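3. Look up the address of the installation

Use either of the two methods described in the 1-click install section above to look up the address of your installation. Note: due to a limitation in the DigitalOcean ingress controller, the kubectl method will not work if you have enabled the settings to support the GeoIP integration. In that case, use the web UI to get your IP address.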

4. Setting up DNS

Do not use posthog or tracking-related words as your subdomain record. As we grow, PostHog-owned domains might be added to tracker blockers. To reduce the risk of tracker blockers interfering with events sent to your self-hosted instance, we suggest avoiding any combination of potentially triggering words in your subdomain. Examples of words to avoid are: posthog, tracking, tracker, analytics, metrics.

Create a DNS record for your desired hostname pointing to the address found above (an A record if it is an IP address, or a CNAME record if it is a hostname). After around 30 minutes (the time required to request, receive and deploy the TLS certificate), you should have a fully working and secure PostHog instance available at the domain you've chosen!

Upgrading the chart

To upgrade the Helm release posthog in the posthog namespace:

Get and update the Helm repo:

```shell
helm repo add posthog https://posthog.github.io/charts-clickhouse/
helm repo update
```

Verify whether the operation is going to be a major version upgrade:

```shell
helm list -n posthog
helm search repo posthog
```

Compare the Helm chart versions reported by the commands above (in the format posthog-{major}.{minor}.{patch}, for example posthog-19.15.1). If the upgrade is to a new major version, check the upgrade notes before moving forward.
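The comparison can be scripted. A minimal sketch with hypothetical helpers: is_major_upgrade compares only the {major} part of two versions, with or without the posthog- prefix, and check_posthog_upgrade assumes jq is installed to read the JSON output of helm list and helm search repo.

```shell
# Hypothetical helper: succeeds if $2 has a higher major version than $1.
# Accepts "posthog-19.15.1" or "19.15.1"; returns 2 if a version is unparsable.
is_major_upgrade() {
  local from_major to_major
  from_major="${1#posthog-}"; from_major="${from_major%%.*}"
  to_major="${2#posthog-}"; to_major="${to_major%%.*}"
  case "$from_major" in ''|*[!0-9]*) return 2 ;; esac
  case "$to_major" in ''|*[!0-9]*) return 2 ;; esac
  [ "$to_major" -gt "$from_major" ]
}

# Hypothetical helper: compares the installed chart with the latest one in the repo.
check_posthog_upgrade() {
  local current latest rc=0
  # Installed chart, e.g. "posthog-19.15.1"
  current=$(helm list --namespace posthog -o json | jq -r '.[] | select(.name == "posthog") | .chart')
  # Latest chart version in the repo, e.g. "19.16.0" (no prefix)
  latest=$(helm search repo posthog/posthog -o json | jq -r '.[0].version')
  is_major_upgrade "$current" "$latest" || rc=$?
  case "$rc" in
    0) echo "Major upgrade ($current -> $latest): read the upgrade notes first" ;;
    1) echo "No major version change ($current -> $latest)" ;;
    *) echo "Could not compare versions ('$current' vs '$latest')" >&2; return 1 ;;
  esac
}
```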

Run the upgrade

```shell
helm upgrade -f values.yaml --timeout 30m --namespace posthog posthog posthog/posthog --atomic --wait --wait-for-jobs --debug
```

Check the Helm documentation for more info about the helm upgrade command.

Uninstalling the chart

To uninstall the chart with the release name posthog in posthog namespace, you can run: helm uninstall posthog --namespace posthog (take a look at the Helm docs for more info about the command).

The command above removes all the Kubernetes components associated with the chart and deletes the release.

Sometimes not everything gets properly removed. If that happens, try deleting the namespace: kubectl delete namespace posthog.
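If you want to see what was left behind before deciding whether to delete the namespace, a hypothetical helper like this one uninstalls the release and then lists the remaining workloads and PersistentVolumeClaims. PVCs created from StatefulSet volumeClaimTemplates are not removed by helm uninstall, and if you set reclaimPolicy to Retain as suggested above, the PersistentVolumes behind them are kept even after the namespace is deleted.

```shell
# Hypothetical helper: uninstalls the release, then lists whatever is still
# in the namespace (for example PVCs that helm does not manage).
uninstall_posthog() {
  helm uninstall posthog --namespace posthog || return 1
  kubectl get all,pvc --namespace posthog
}
```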

Next steps

Now that your deployment is up and running, here are a couple of guides we'd recommend you check out to fully configure your instance.